Real-time signals for sales lead prioritization

Harness the power of AI to find real-time sales data. Our AI agents let you apply your deep customer insights to find up-to-date information on leads. Whatever signals you use for prioritization, we have you covered.

Get 2x better leads, 2x faster, with 0 effort

SDRs should spend time selling, not researching.

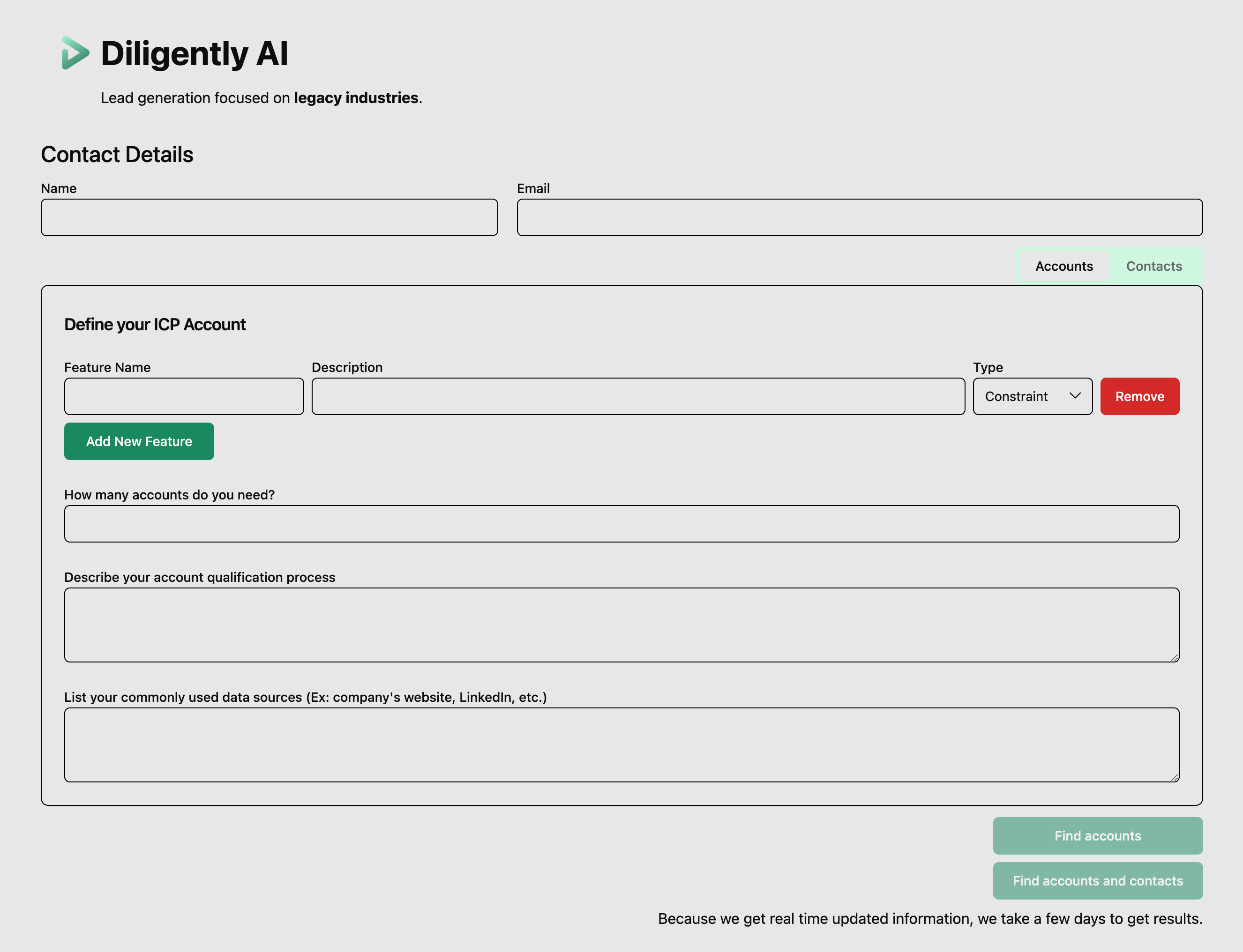

Automatically find the best accounts to target based on your ideal customer profile.

Sales Consulting - for when a product is just a product

Products are "one-size-fits-all". When "one size" isn't the right size, we come in with our sales consulting services. We'll help you get back on track.

Post-Mortem Reporting

Unearth the "why" behind your sales.

Our data science foundation means we find all the patterns hidden in your successes and failures.

Rapid Hypothesis Testing

Determine the best course of action.

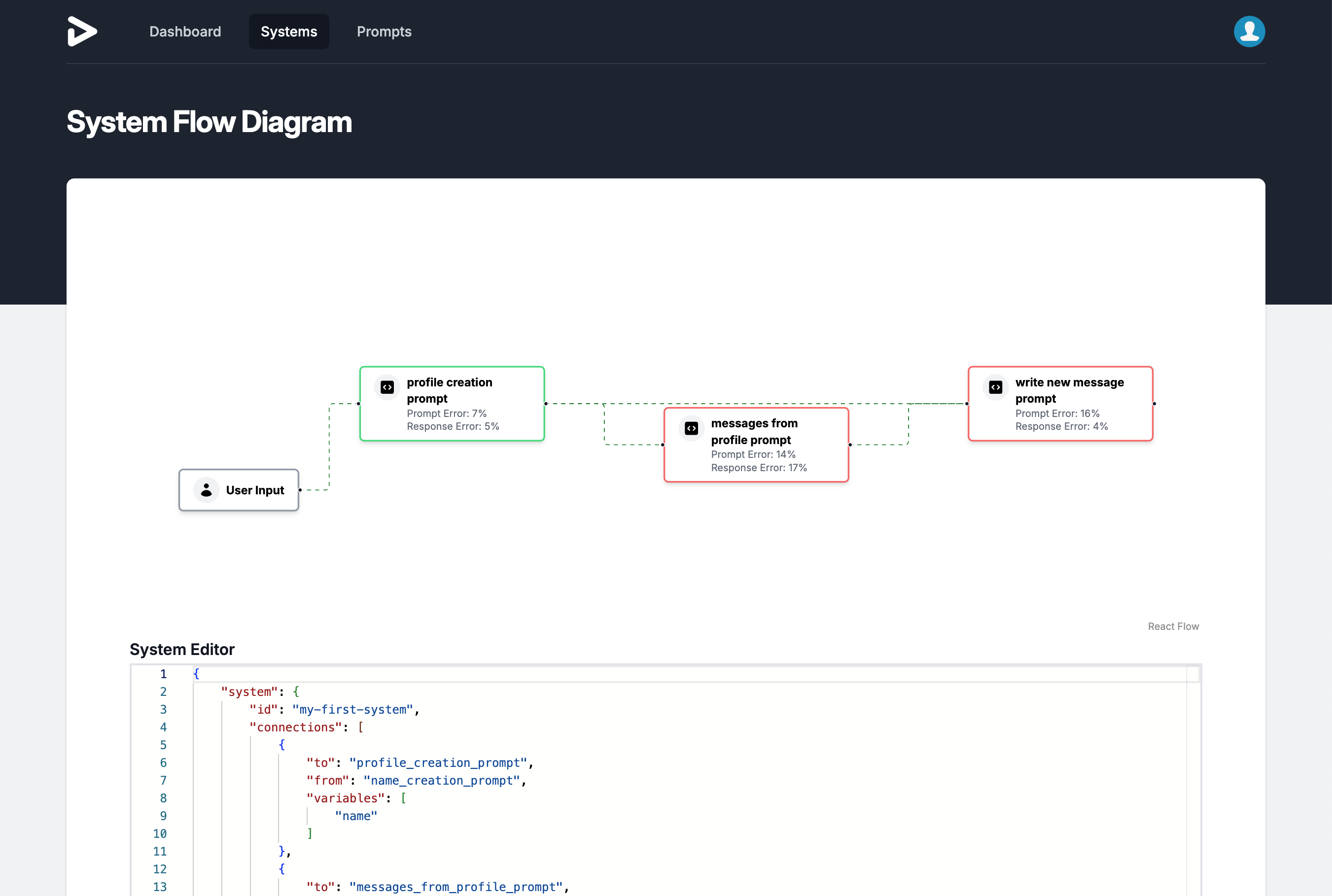

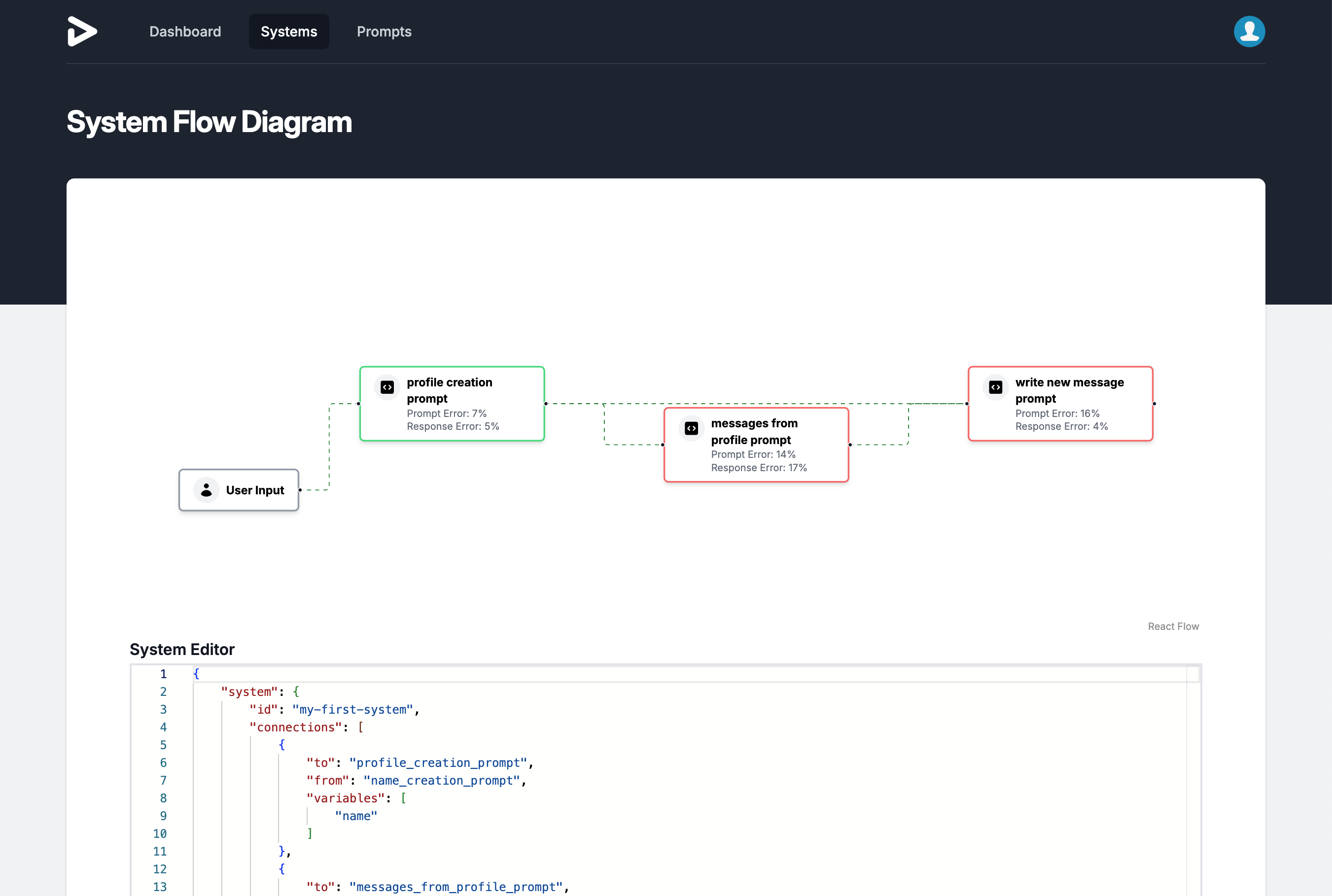

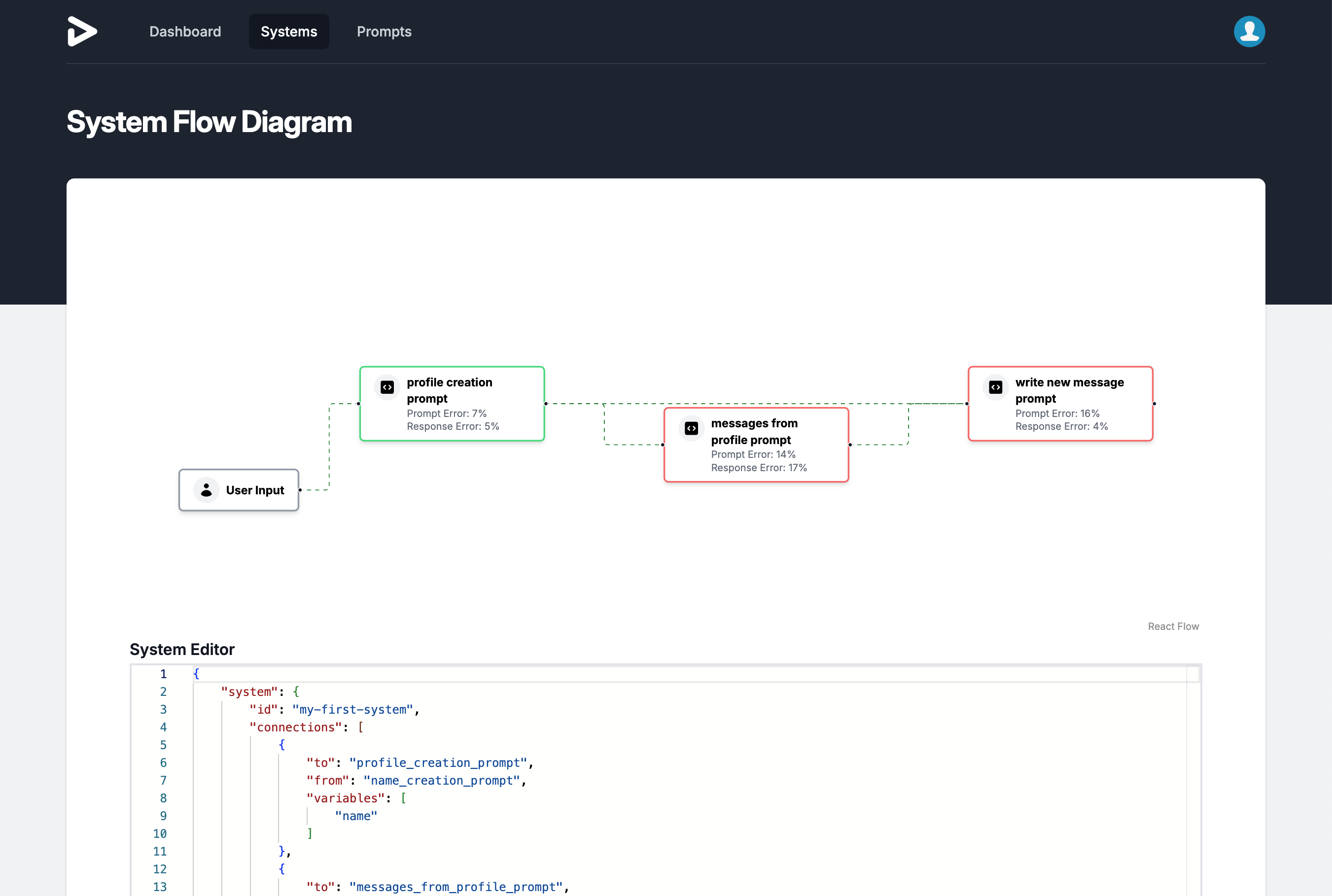

We use our AI agents to test your hypotheses rapidly. Running tests at this scale produces enough data to draw meaningful conclusions.

Bespoke Solutions

Custom-built for your business.

Because we are engineers first, we can build custom solutions to fit your unique business needs.
